We present SDFit, a novel render-and-compare framework that recovers 3D object pose and shape from a single image. At its core is a 3D morphable signed-distance function (mSDF), and SDFit further exploits recent foundation models.

Recovering 3D object pose and shape from a single image is a challenging and ill-posed problem. This is due to strong (self-)occlusions, depth ambiguities, the vast intra- and inter-class shape variance, and the lack of 3D ground truth for natural images. Existing deep-network methods are trained on synthetic datasets to predict 3D shapes, so they often struggle to generalize to real-world images. Moreover, they lack an explicit feedback loop for refining noisy estimates, and they primarily focus on geometry without directly considering pixel alignment. To tackle these limitations, we develop a novel render-and-compare optimization framework, called SDFit. It has three key innovations. First, it uses a learned, category-specific, morphable signed-distance-function (mSDF) model and fits it to an image by iteratively refining both 3D pose and shape. The mSDF robustifies inference by constraining the search to the manifold of valid shapes, while allowing for arbitrary shape topologies. Second, SDFit retrieves an initial 3D shape that likely matches the image by exploiting foundation models for efficient look-up in 3D shape databases. Third, SDFit initializes pose by establishing rich 2D-3D correspondences between the image and the mSDF through foundation-model features. We evaluate SDFit on three image datasets: Pix3D, Pascal3D+, and COMIC. SDFit performs on par with SotA feed-forward networks for unoccluded images and common poses, but is uniquely robust to occlusions and uncommon poses. Moreover, it requires no retraining for unseen images. Thus, SDFit contributes new insights for generalizing in the wild.

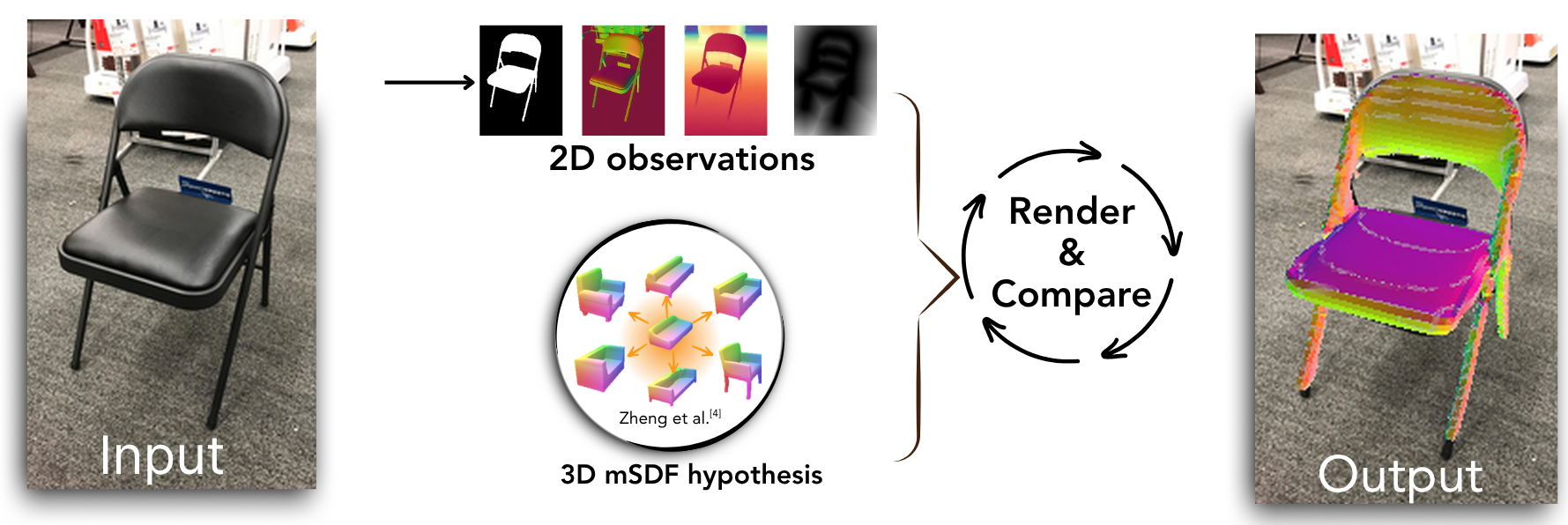

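SDFit represents object shape via a morphable signed distance function (mSDF) and jointly estimates pose and shape by minimizing a render-and-compare objective. The pipeline first retrieves an initial shape and initializes the pose with the help of foundation models, and then iteratively refines pose and shape together.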

Figure: High-level overview of SDFit.
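To make the render-and-compare objective described above concrete, here is a minimal 2D toy sketch; it is not SDFit's implementation. All specifics are assumptions: the mSDF is replaced by a hypothetical circle whose radius is `0.3 + shape_code`, "pose" is just a 2D translation, the renderer is a soft silhouette `sigmoid(-sdf / tau)`, and gradients come from finite differences rather than a differentiable rasterizer such as nvdiffrast. Pose and shape are still refined jointly in one loop, which is the point of the example.

```python
import numpy as np

# Hypothetical toy stand-in for the mSDF: a circle at the origin whose
# radius is 0.3 + shape_code (a one-dimensional "shape space").
def toy_msdf(points, shape_code):
    """Signed distance: negative inside the shape, positive outside."""
    return np.linalg.norm(points, axis=-1) - (0.3 + shape_code)


def render_silhouette(params, grid, tau=0.05):
    """Soft silhouette sigmoid(-sdf / tau) evaluated at every pixel centre.

    params = (tx, ty, z): a 2D translation (toy "pose") and the shape code z.
    """
    tx, ty, z = params
    sdf = toy_msdf(grid - np.array([tx, ty]), z)  # map pixels into the object frame
    return 0.5 * (1.0 - np.tanh(sdf / (2.0 * tau)))  # stable form of the sigmoid


def compare(params, grid, target_mask):
    """Render-and-compare objective: mean squared silhouette error."""
    return np.mean((render_silhouette(params, grid) - target_mask) ** 2)


def fit(target_mask, grid, init, steps=400, step0=0.05, decay=0.985, eps=1e-5):
    """Jointly refine pose and shape with normalized gradient descent.

    Gradients use central finite differences to keep the sketch dependency-free.
    """
    params = np.asarray(init, dtype=float)
    step = step0
    for _ in range(steps):
        grad = np.zeros_like(params)
        for i in range(params.size):
            delta = np.zeros_like(params)
            delta[i] = eps
            grad[i] = (compare(params + delta, grid, target_mask)
                       - compare(params - delta, grid, target_mask)) / (2 * eps)
        norm = np.linalg.norm(grad)
        if norm < 1e-12:  # already at a stationary point
            break
        params = params - step * grad / norm  # fixed-length step downhill
        step *= decay  # shrink the step so the iterates settle
    return params


# Usage: recover a known circle from its binary silhouette.
xs = np.linspace(-1.0, 1.0, 64)
grid = np.stack(np.meshgrid(xs, xs), axis=-1)  # (64, 64, 2) pixel centres
true_params = np.array([0.10, -0.05, 0.08])   # pose (0.10, -0.05), radius 0.38
target = (toy_msdf(grid - true_params[:2], true_params[2]) < 0).astype(float)
est = fit(target, grid, init=[0.0, 0.0, 0.0])
```

Normalized steps with a decaying length are used here only because they are robust without tuning a learning rate; a real system would backpropagate through the renderer and use a standard optimizer.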

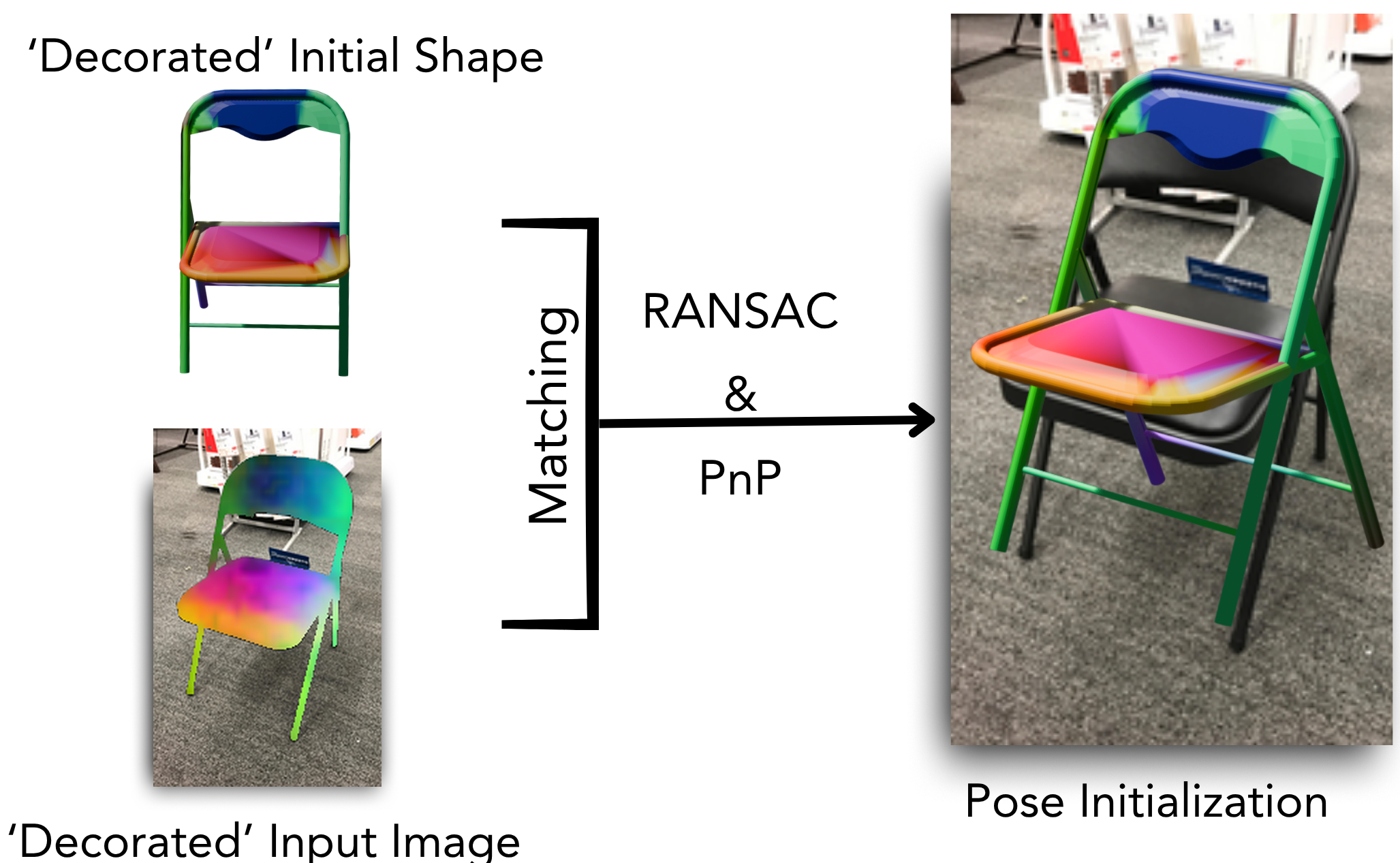

Pose initialization via dense 2D–3D correspondences.
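The caption above can be illustrated with a minimal sketch of correspondence-based pose initialization, under loudly stated assumptions: the per-pixel and per-vertex features are random stand-ins for foundation features (e.g. Diff3F), matching uses mutual nearest neighbours under cosine similarity, and the camera is weak-perspective (scaled orthographic), solved by least squares plus an SVD projection onto orthonormal rows. These are standard choices, not confirmed details of how SDFit solves for pose.

```python
import numpy as np


def normalize(feats):
    """L2-normalize each row so dot products become cosine similarities."""
    return feats / np.linalg.norm(feats, axis=1, keepdims=True)


def mutual_nn_matches(feat_2d, feat_3d):
    """Mutual nearest neighbours between pixel features (M, D) and vertex features (N, D).

    Returns (pixel_idx, vertex_idx) arrays of the same length.
    """
    sim = normalize(feat_2d) @ normalize(feat_3d).T  # (M, N) cosine similarities
    best_vertex = sim.argmax(axis=1)  # best vertex for each pixel
    best_pixel = sim.argmax(axis=0)   # best pixel for each vertex
    pixels = np.arange(len(feat_2d))
    keep = best_pixel[best_vertex] == pixels  # keep only mutual agreements
    return pixels[keep], best_vertex[keep]


def fit_weak_perspective(pts_3d, pts_2d):
    """Least-squares weak-perspective camera x ~ s * R[:2] @ X + t (needs >= 4 non-coplanar points)."""
    mu_3d, mu_2d = pts_3d.mean(axis=0), pts_2d.mean(axis=0)
    X, x = pts_3d - mu_3d, pts_2d - mu_2d
    # Unconstrained 2x3 affine map M with x ~ X @ M.T.
    M_T, *_ = np.linalg.lstsq(X, x, rcond=None)
    M = M_T.T
    # Project M onto s * (matrix with orthonormal rows) via SVD.
    U, S, Vt = np.linalg.svd(M, full_matrices=False)
    R2 = U @ Vt
    s = S.mean()
    R = np.vstack([R2, np.cross(R2[0], R2[1])])  # complete a right-handed rotation
    t = mu_2d - s * R2 @ mu_3d
    return s, R, t


# Usage on synthetic data: random vertices with random (stand-in) features.
rng = np.random.default_rng(0)
verts = rng.normal(size=(200, 3))
vert_feat = rng.normal(size=(200, 32))
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
R_true = Q * np.sign(np.linalg.det(Q))  # flip sign if needed so det(R_true) = +1
s_true, t_true = 2.0, np.array([0.5, -0.3])

visible = rng.choice(200, size=120, replace=False)  # vertices seen in the image
pix_xy = s_true * verts[visible] @ R_true[:2].T + t_true
pix_feat = vert_feat[visible] + 0.05 * rng.normal(size=(120, 32))

pix_idx, vert_idx = mutual_nn_matches(pix_feat, vert_feat)
s_est, R_est, t_est = fit_weak_perspective(verts[vert_idx], pix_xy[pix_idx])
```

With real, noisy matches one would typically wrap the camera fit in a robust estimator such as RANSAC before handing the pose to the render-and-compare refinement.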

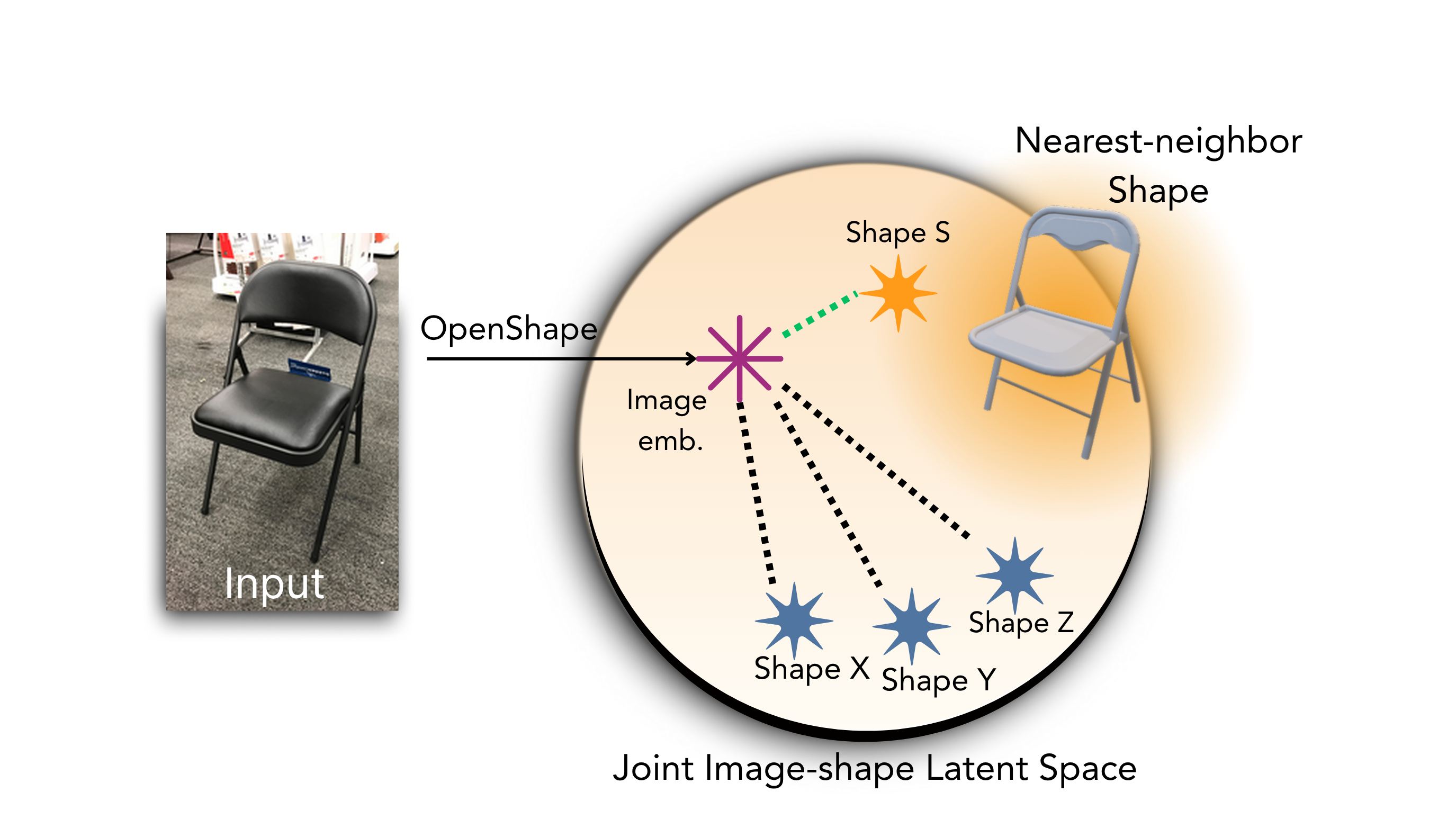

Shape retrieval/initialization using foundation models.
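Shape retrieval of this kind can be sketched as nearest-neighbour search in a shared image/3D embedding space, as provided by models such as OpenShape. This is a minimal sketch under assumptions: the embeddings are random stand-ins, and `db_codes` (a hypothetical table holding one mSDF latent code per database shape) illustrates one plausible way a retrieved shape could seed the shape code; SDFit's exact mechanism is not specified here.

```python
import numpy as np


def retrieve_top_k(query_emb, db_embs, k=3):
    """Indices and cosine similarities of the k database shapes closest to the query."""
    q = query_emb / np.linalg.norm(query_emb)
    db = db_embs / np.linalg.norm(db_embs, axis=1, keepdims=True)
    sims = db @ q                    # (N,) cosine similarity per database shape
    order = np.argsort(-sims)[:k]    # descending similarity
    return order, sims[order]


def init_shape_code(query_emb, db_embs, db_codes):
    """Initialize the shape code with the code of the best-matching database shape."""
    idx, _ = retrieve_top_k(query_emb, db_embs, k=1)
    return db_codes[idx[0]].copy()


# Usage with random stand-in data: 100 shapes, 64-D embeddings, 16-D shape codes.
rng = np.random.default_rng(1)
db_embs = rng.normal(size=(100, 64))
db_codes = rng.normal(size=(100, 16))
query = 3.0 * db_embs[42] + 0.01 * rng.normal(size=64)  # an "image" of shape 42

top_idx, top_sims = retrieve_top_k(query, db_embs, k=3)
z0 = init_shape_code(query, db_embs, db_codes)
```

Cosine similarity ignores embedding magnitude, so the scaled query still retrieves shape 42; for large databases the brute-force `argsort` would usually be replaced by an approximate nearest-neighbour index.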

@inproceedings{antic2025sdfit,
  title = {{SDFit}: {3D} Object Pose and Shape by Fitting a Morphable {SDF} to a Single Image},
  author = {Anti\'{c}, Dimitrije and Paschalidis, Georgios and Tripathi, Shashank and Gevers, Theo and Dwivedi, Sai Kumar and Tzionas, Dimitrios},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2025},
}

Our method builds upon prior open-source efforts. We thank the authors for releasing their code and models: Deep Implicit Templates, nvdiffrast, FlexiCubes, OpenShape, and Diff3F.

Acknowledgements: We thank Božidar Antić, Yuliang Xiu and Muhammed Kocabas for useful insights. We acknowledge EuroHPC JU for awarding the project ID EHPC-AI-2024A06-077 access to Leonardo BOOSTER. This work also used the Dutch national e-infrastructure with the support of the SURF Cooperative using grant no. EINF-7589. This work is partly supported by the ERC Starting Grant (project STRIPES, 101165317, PI: D. Tzionas). D. Tzionas has received a research gift from Google and from the NVIDIA Academic Grant Program.